Quantum Computing Demystified: Exploring the Potential and Challenges

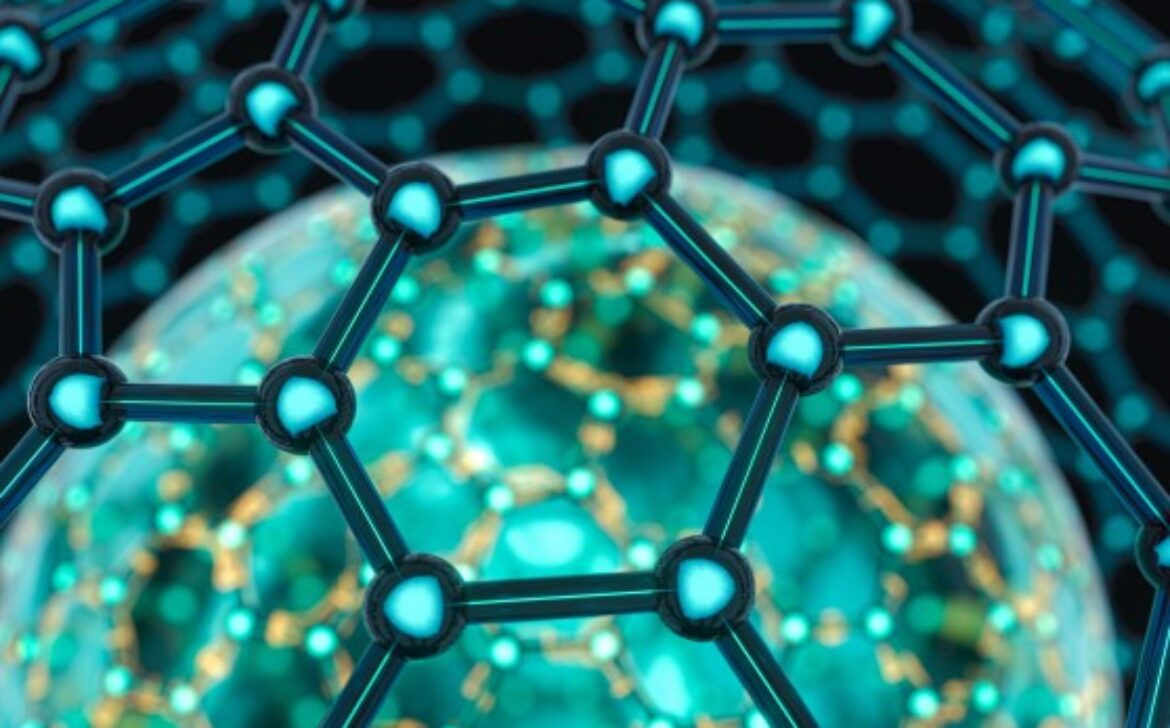

Quantum computing is a frontier technology that promises to change the way we process information. Unlike classical computers that rely on binary bits, quantum computers use quantum bits, or qubits, offering the potential to solve certain classes of problems far faster than any known classical method. As this field gains momentum, it’s important to demystify the concept of quantum computing, explore its potential, and understand the challenges that lie ahead.

Understanding Quantum Computing

At its core, quantum computing harnesses the principles of quantum mechanics to process information. In classical computing, a bit is either 0 or 1. A qubit, in contrast, can be in a superposition: a weighted combination of 0 and 1 that resolves to a single value only when it is measured. Combined with other quantum effects such as entanglement and interference, superposition lets quantum algorithms tackle certain problems that would take classical computers years or even centuries to complete.
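
To make superposition concrete, here is a minimal Python sketch of a single qubit simulated on an ordinary computer. It is an illustration under stated assumptions, not how quantum hardware is actually programmed: the state is stored as a pair of amplitudes, and the helper names `apply_hadamard` and `measurement_probabilities` are hypothetical, chosen only for this example.

```python
import math

def apply_hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (alpha, beta)."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))

def measurement_probabilities(state):
    """Return the probabilities of measuring 0 and 1 (squared amplitude magnitudes)."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)

# Start in the definite state 0: amplitude 1 for "0", amplitude 0 for "1".
zero = (1.0, 0.0)

# One Hadamard gate puts the qubit into an equal superposition of 0 and 1.
plus = apply_hadamard(zero)
probs = measurement_probabilities(plus)

# A second Hadamard gate makes the amplitudes interfere and restores state 0.
back = apply_hadamard(plus)

print("probabilities after one gate:", probs)
print("state after two gates:", back)
```

Simulating n qubits this way requires tracking 2^n amplitudes, which is one reason classical simulation becomes impractical as the number of qubits grows.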

Potential Applications

The potential applications of quantum computing span across various domains, including:

- Cryptography: A sufficiently large, error-corrected quantum computer could break widely used public-key encryption schemes such as RSA using Shor’s algorithm, necessitating the development of quantum-resistant encryption methods.

- Optimization: Quantum algorithms could solve some optimization problems with numerous variables more efficiently than classical methods, which has implications for logistics, supply chain management, and financial modeling.

- Drug Discovery: The ability to simulate complex molecular interactions could accelerate drug discovery and lead to breakthroughs in medical research.

- Machine Learning: Quantum computers could speed up parts of machine learning algorithms, potentially enabling faster pattern recognition and data analysis.

- Climate Modeling: Quantum computing could simulate complex climate models more accurately, aiding in climate change mitigation strategies.

Challenges and Limitations

While the potential of quantum computing is immense, several challenges and limitations need to be addressed:

- Qubit Stability: Qubits are fragile and prone to errors due to their sensitivity to external influences. Maintaining qubit stability is crucial for reliable computations.

- Decoherence: Quantum states degrade quickly when they interact with their environment. This phenomenon, known as decoherence, limits how long a computation can run before errors dominate the result.

- Error Correction: Quantum error correction is essential to mitigate the impact of errors that naturally occur in quantum systems. Developing robust error correction methods is a significant challenge.

- Hardware Development: Building and scaling quantum hardware is a complex engineering task. Many leading designs, such as superconducting qubits, must operate at temperatures close to absolute zero and be shielded from external interference.

- Programming and Algorithms: Developing algorithms that can take full advantage of quantum capabilities is a formidable challenge. Quantum programming languages and software frameworks are still in their infancy.
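
The idea behind error correction can be illustrated with a classical analogy: store each bit three times and take a majority vote. The sketch below assumes each copy flips independently with probability `p`, and its function names are hypothetical. Real quantum error correction is considerably more involved, because qubits cannot simply be copied and errors must be detected without directly measuring the encoded data.

```python
def majority_vote(bits):
    """Decode a three-bit repetition code by majority vote."""
    return 1 if sum(bits) >= 2 else 0

def logical_error_rate(p):
    """Chance the majority vote is wrong: two or all three copies flipped."""
    return 3 * p**2 * (1 - p) + p**3

# Compare the raw (physical) error rate with the error rate after decoding.
for p in (0.1, 0.01, 0.001):
    print(f"physical error {p}: logical error {logical_error_rate(p):.3g}")
```

In this simplified model the encoding helps only when `p` is below 0.5, and the benefit grows as `p` shrinks. This is why improving the quality of individual qubits and developing error correction go hand in hand.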

Current Progress

Although large-scale, fault-tolerant quantum computers are not yet a reality, significant progress has been made. Companies like IBM, Google, and Microsoft are developing quantum hardware and software platforms. Quantum processors ranging from a few qubits to more than a hundred are now accessible via cloud-based platforms, allowing researchers and developers to experiment and learn.

Conclusion

Quantum computing holds the promise of solving problems once considered intractable, transforming industries and sparking innovation. However, it’s essential to understand that quantum computing is still in its early stages, with technical challenges and limitations that need to be overcome. As the field advances, collaboration between researchers, engineers, and computer scientists will play a pivotal role in unlocking its potential. While the road ahead is complex, the rewards could reshape the future of technology in ways we can only begin to imagine.